Reinforcement Learning at Lyft

Posted: Updated:

I created my YouTube series on Reinforcement Learning because I saw it applied profitably at Lyft. It was a counterexample to the stigma:

RL is only good for scenarios where a perfect simulator can be accessed endlessly. It’s general-but-slow trial-and-error.

There’s truth here, but I believe the stigma will fade due to things like.. Lyft’s recent paper explaining a successful application of RL:

A Better Match for Drivers and Riders: Reinforcement Learning at Lyft

An Overview

-

They address the problem of matching. When a ride is requested, which driver should be matched? This comes down to repeatedly solving a bipartite matching problem, a classic optimization.

-

The optimization requires specifying weights, each of which indicates the value of matching a particular driver with a particular request.

-

These weights can be determined from action-value estimates, quantities produced when training an RL agent, if the RL problem is defined properly.

-

Specifically, their agents represent drivers and their state is a driver’s place in space and time. The agent actions are to accept/ignore a request. The value of an action is the expected earnings.

-

They train their agents “online,” meaning the agents are continuously updating themselves, which creates challenges (e.g. model monitoring must be hard) that dissuade most. For them, this provides a real-time adaptivity to the market.

-

With trained agents, they can generate improved bipartite matching weights.

-

Evaluating this is hard. You can’t A/B test it. You need to at least put an entire market onto/off the RL approach repeatedly.

-

It works! They saw 13% fewer instances where a driver couldn’t be matched with a rider. This and other effects equate to 30 million in additional annual revenue.

Some Observations

-

It’s no surprise it’s not a fully RL solution. You might expect an RL problem where there’s one agent, one who’s actions are all possible matching decisions. This would be totally unlearnable, due to the size of the action space. They keep the state-space and action-space small by settings agents = drivers. Plus, if you can frame something as a bipartite matching problem, you get to apply powerful solvers.

-

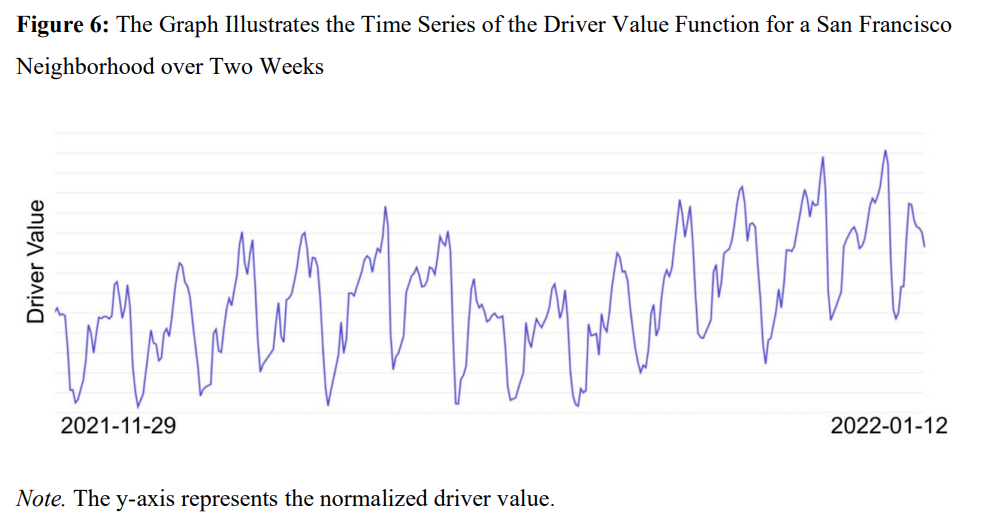

Value-based RL comes with interesting intermediate quantities, like action-values or state-values. For them, they are useful intuitive-checks. They have a sense of when a driver is more/less valuable and if their intuition is matched, they gain confidence. This plot makes sense to them:

- The market changes over time and RL agents need time to learn these changes. How fast it responds depends on how it represents the market. Given this is all done online and evaluation is hard, I suspect managing the bias-variance tradeoff is especially difficult.

Well done!

This effort took years, and I know the challenge of developing something tested against a nonstationary, partially observed, self referential, adversarial and complex real world system. This is an impressive feat; well done to the team!