TrueSkill Part 2: Who is the GOAT?


In the previous post, we argued that the question 'Who is the Greatest Of All Time?' for any competitive game is answerable with an algorithm. Such an algorithm can account for the facts that skill varies over time and that many players never played each other in their prime.

To demonstrate, we reviewed the mechanics of the TrueSkill algorithm, invented by a team at Microsoft Research in response to the inability of the Elo rating system to handle multiplayer, multiteam games.

In this post, we'll use a variant of this algorithm, 'TrueSkill Through Time,' along with match data to answer the 'Who is the GOAT?' question for tennis, Warcraft 3, and boxing.

TrueSkill Through Time

As mentioned at the end of Part 1, 'vanilla' TrueSkill requires some adjustment to properly answer the GOAT question. First, a player's skill estimate depends only on past data. This is a fair condition if the skill is to be used for forecasting, but it's suboptimal for the GOAT question. If a player beats another who later becomes a top-tier player, the winner's skill should be revised upward in retrospect. For example, anyone who had defeated Mike Tyson in his first professional bout should, in hindsight, receive a substantial skill boost.

Second, vanilla TrueSkill indexes time with matches. It treats two matches separated by three days the same as two matches separated by three years. In reality, skills evolve over time, even when no matches occur.

These issues are addressed by the TrueSkill Through Time algorithm. In this analysis, we use the implementation developed by Gustavo Landfried.
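The second fix, letting skill evolve with the calendar rather than with the match count, can be sketched in a few lines. A common way to model this, and the idea behind TrueSkill Through Time's dynamics, is to treat skill as a Gaussian random walk, so a skill estimate's variance grows with the time elapsed since the last match: \(\sigma^2 \to \sigma^2 + \gamma^2 \Delta t\). The sketch below is a minimal illustration under that assumption; the function name `inflate_uncertainty` and the values of `sigma` and `gamma` are hypothetical, not taken from Landfried's implementation or this analysis.

```python
import math


def inflate_uncertainty(sigma: float, gamma: float, elapsed: float) -> float:
    """Prior std. dev. of a skill estimate after `elapsed` time units without
    matches, assuming skill drifts as a Gaussian random walk whose variance
    grows by gamma**2 per time unit. The skill mean is left unchanged."""
    if elapsed < 0:
        raise ValueError("elapsed time must be non-negative")
    return math.sqrt(sigma**2 + elapsed * gamma**2)


# Hypothetical values: a well-estimated skill (sigma = 1.0), daily drift gamma = 0.03.
sigma, gamma = 1.0, 0.03
for label, days in [("three days", 3), ("three years", 3 * 365)]:
    new_sigma = inflate_uncertainty(sigma, gamma, days)
    print(f"{label}: sigma grows from {sigma} to {new_sigma:.3f}")
```

Unlike match-indexed TrueSkill, a three-year gap now widens the uncertainty far more than a three-day gap, so the next result after a long layoff moves the estimate more.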

Tennis

Our first application is to tennis, which Landfried also analyzed. The code, available here, produces skill estimates over time:

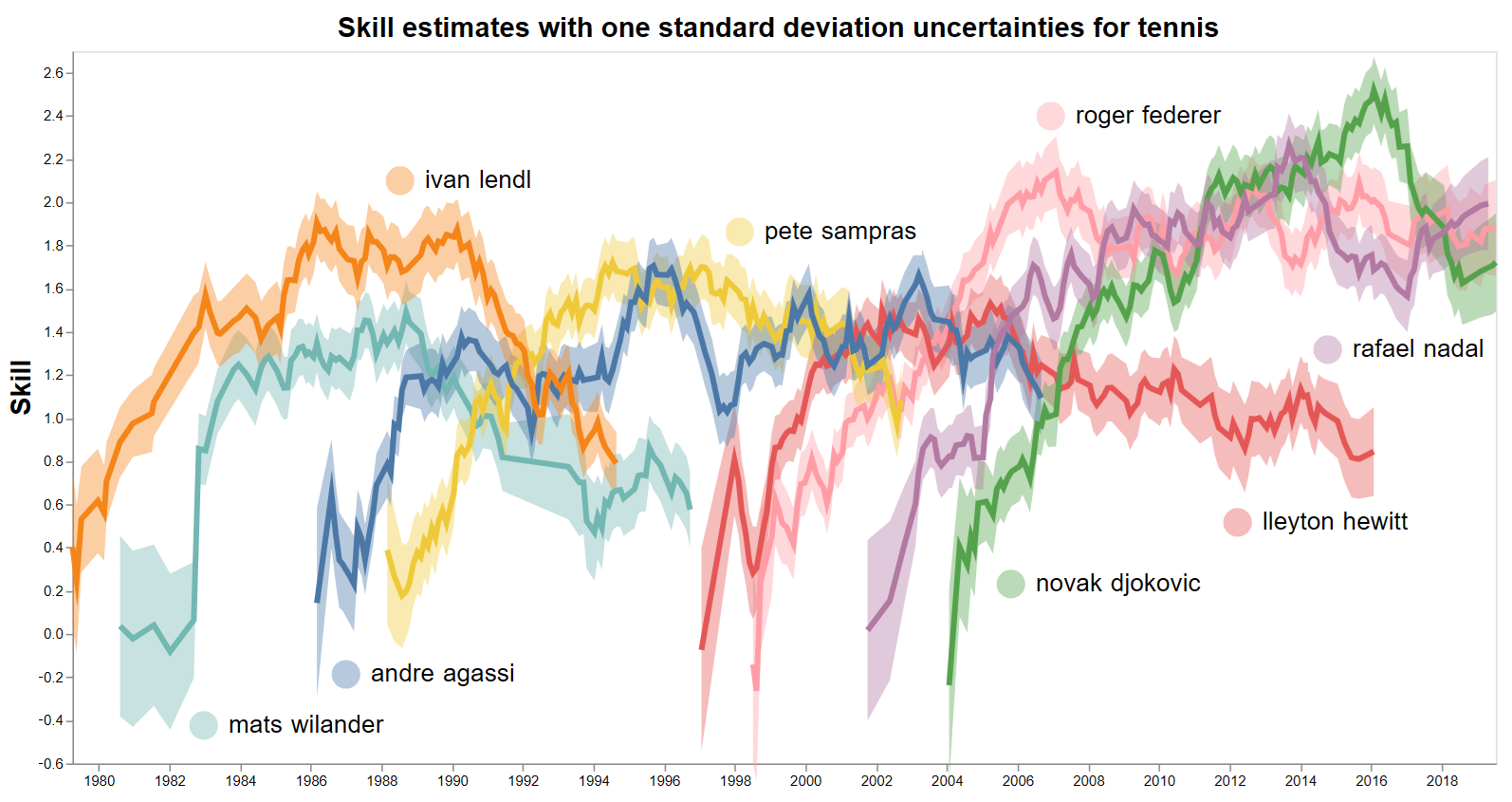

Skill estimates, along with one standard deviation uncertainty bands, for some top tennis players over the past four decades.


For readability, the skill estimates are shown only for select top players from the past forty or so years. The estimates themselves come from a dataset of 284,664 matches involving 12,307 players over the past 110 years.

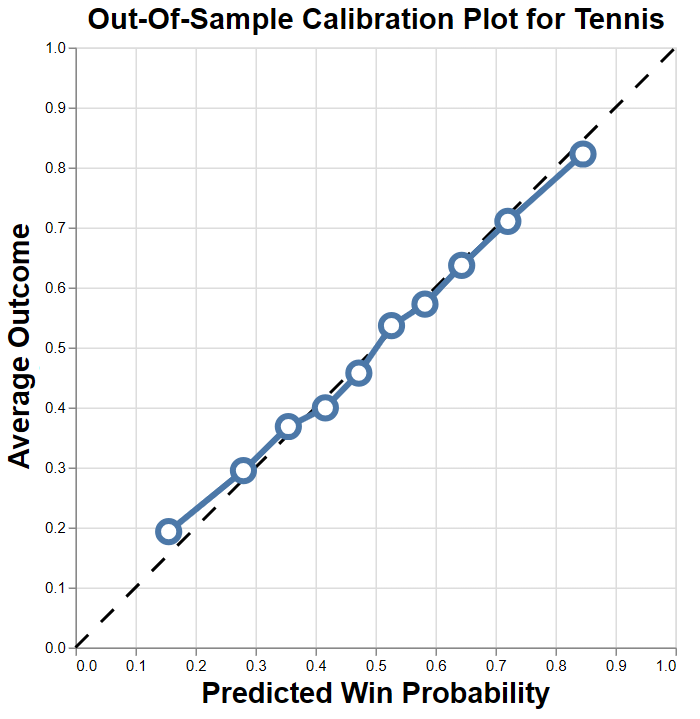

A remaining question is, ‘how do we know these estimates are any good?’ To answer that, we’ve held out the past four years of data and produced the following calibration plot.

This test-set calibration plot answers the question ‘If the model predicts a win probability of x percent, what is the actual win percentage?’ For a well calibrated model, these numbers should be close; the points plotted should fall along the diagonal line.
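A calibration plot like this can be built from any list of held-out predictions. The sketch below assumes equal-width probability bins and one prediction per match, taken from one player's perspective; the function name `calibration_bins`, the bin count, and the toy data are illustrative assumptions, not the original notebook's code.

```python
from typing import List, Sequence, Tuple


def calibration_bins(probs: Sequence[float], outcomes: Sequence[int],
                     n_bins: int = 10) -> List[Tuple[float, float, int]]:
    """Return (mean predicted probability, observed win rate, count) for each
    non-empty equal-width bin. outcomes[i] is 1 if the player who was given
    probs[i] won, else 0."""
    totals = [[0.0, 0, 0] for _ in range(n_bins)]  # [sum of p, wins, count]
    for p, y in zip(probs, outcomes):
        i = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the top bin
        totals[i][0] += p
        totals[i][1] += y
        totals[i][2] += 1
    return [(s / n, w / n, n) for s, w, n in totals if n > 0]


# Toy held-out predictions, made up for illustration.
probs = [0.12, 0.18, 0.81, 0.86, 0.93]
outcomes = [0, 0, 1, 1, 0]
for mean_p, win_rate, n in calibration_bins(probs, outcomes):
    print(f"predicted {mean_p:.2f}  actual {win_rate:.2f}  (n={n})")
```

Plotting each bin's mean prediction against its observed win rate gives one point per bin; a well-calibrated model keeps those points near the diagonal.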


The model is well calibrated, giving us confidence in its estimates¹. Therefore, we can answer the GOAT question confidently:

Established with his 2016 season, Novak Djokovic is the greatest of all time. If every player played every other player in their primes, Djokovic would have the highest estimated win probability.

As further validation, his 2016 season has been independently noted as remarkable.
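The phrase 'if every player played every other player in their primes' can be made concrete. Under TrueSkill's Gaussian model (ignoring draws), the probability that A beats B is \(\Phi\big((\mu_A - \mu_B)/\sqrt{2\beta^2 + \sigma_A^2 + \sigma_B^2}\big)\). The sketch below averages that probability over a field of peak estimates. The averaging rule, the function names, and the numbers are assumptions for illustration, not necessarily how the original notebooks rank players.

```python
import math
from typing import Dict, Tuple


def win_probability(mu_a: float, sigma_a: float, mu_b: float, sigma_b: float,
                    beta: float) -> float:
    """P(A beats B), ignoring draws. Each performance is skill plus N(0, beta^2)
    noise, and each skill is itself uncertain with std. dev. sigma."""
    spread = math.sqrt(2 * beta**2 + sigma_a**2 + sigma_b**2)
    # Standard normal CDF of the standardized skill gap, written via erf.
    return 0.5 * (1 + math.erf((mu_a - mu_b) / (spread * math.sqrt(2))))


def mean_win_probability(peaks: Dict[str, Tuple[float, float]],
                         beta: float) -> Dict[str, float]:
    """For each player, the average win probability against every other
    player, everyone taken at their (mu, sigma) peak. Needs >= 2 players."""
    result = {}
    for name, (mu, sigma) in peaks.items():
        probs = [win_probability(mu, sigma, mu_o, sigma_o, beta)
                 for other, (mu_o, sigma_o) in peaks.items() if other != name]
        result[name] = sum(probs) / len(probs)
    return result


# Hypothetical peak estimates, not values from the tennis analysis.
peaks = {"player_a": (6.0, 0.5), "player_b": (5.5, 0.4), "player_c": (4.0, 0.8)}
scores = mean_win_probability(peaks, beta=1.0)
print(max(scores, key=scores.get))  # → player_a
```

Because the uncertainties enter the denominator, a peak backed by little data is pulled toward 50% against everyone, which is one reason a long, dense peak season carries weight in this comparison.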

Warcraft 3

Warcraft 3, a classic real-time strategy game with a small but loyal player base, may seem like an unusual choice relative to tennis and boxing. It was included primarily because it has abundant data: some players play multiple games a day for many years. Secondarily, I personally have some nostalgia for it and enjoyed the excuse to explore the game's data.

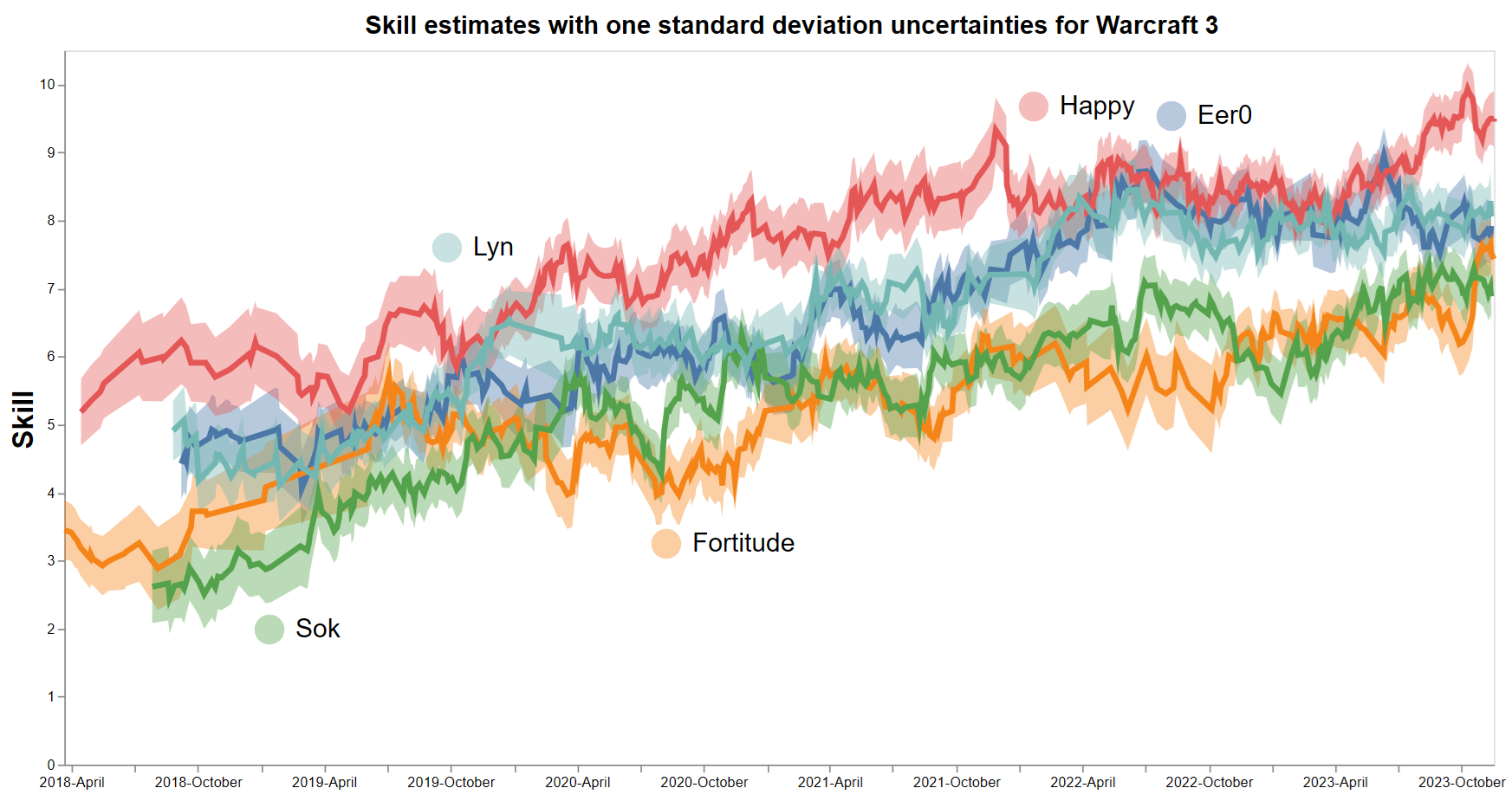

With this notebook, we produce the following skill estimates.

Skill estimates of five top players over the past six years.


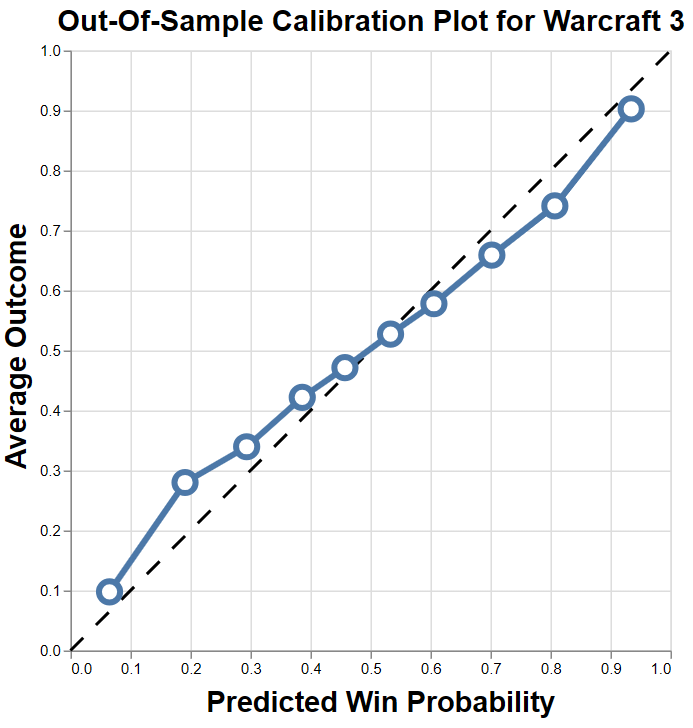

Again, we view the calibration plot to evaluate the model.

The skill estimates for Warcraft 3 are mostly well calibrated.


The model is slightly overconfident when its predicted win probabilities fall between 10–30% or 70–90%. This suggests that the single hyperparameter \(\beta\), which sets how much match outcomes depend on performance noise ('luck') rather than skill, may not be flexible enough to capture the game's noisy dynamics; the level of luck may depend on the difference in skills.

Nonetheless, this modest miscalibration would not change the answer to the GOAT question. We may say confidently:

Established in late 2021, Dmitry “Happy” Kostin is the greatest of all time.

For those experienced with the game, this is not surprising, since Happy has been dominant for nearly a decade.
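The remark about \(\beta\) can be made concrete. Treating skills as known (a simplification), TrueSkill's win probability for a skill gap \(\Delta\) is \(\Phi\big(\Delta / (\sqrt{2}\,\beta)\big)\), so \(\beta\) alone sets how quickly predictions move away from 50% as the gap grows. The sketch below picks \(\beta\) by minimizing held-out log loss over a grid of candidates; the function names, the grid, and the toy data are hypothetical. Because one number must serve every skill gap, such a fit can trade accuracy in one probability range for another.

```python
import math
from typing import Sequence


def win_prob(gap: float, beta: float) -> float:
    """P(win) for a known skill gap (player minus opponent): Phi(gap / (sqrt(2) * beta))."""
    return 0.5 * (1 + math.erf(gap / (2 * beta)))


def log_loss(gaps: Sequence[float], outcomes: Sequence[int], beta: float,
             eps: float = 1e-12) -> float:
    """Mean negative log-likelihood of 0/1 outcomes under a given beta."""
    total = 0.0
    for g, y in zip(gaps, outcomes):
        p = min(max(win_prob(g, beta), eps), 1 - eps)  # clip to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(gaps)


def fit_beta(gaps: Sequence[float], outcomes: Sequence[int],
             grid: Sequence[float]) -> float:
    """Return the candidate beta with the lowest log loss."""
    return min(grid, key=lambda b: log_loss(gaps, outcomes, b))


# Made-up held-out data: skill gaps (player minus opponent) and results.
gaps = [0.5, 1.0, 1.5, 2.0, 0.5, 1.0]
outcomes = [1, 1, 1, 1, 0, 0]
print(fit_beta(gaps, outcomes, grid=[0.25, 0.5, 1.0, 2.0, 4.0]))
```

A larger \(\beta\) pulls every prediction toward 50% at once; it cannot make small-gap predictions more cautious while leaving large-gap ones unchanged, which is the inflexibility suggested above.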

Boxing

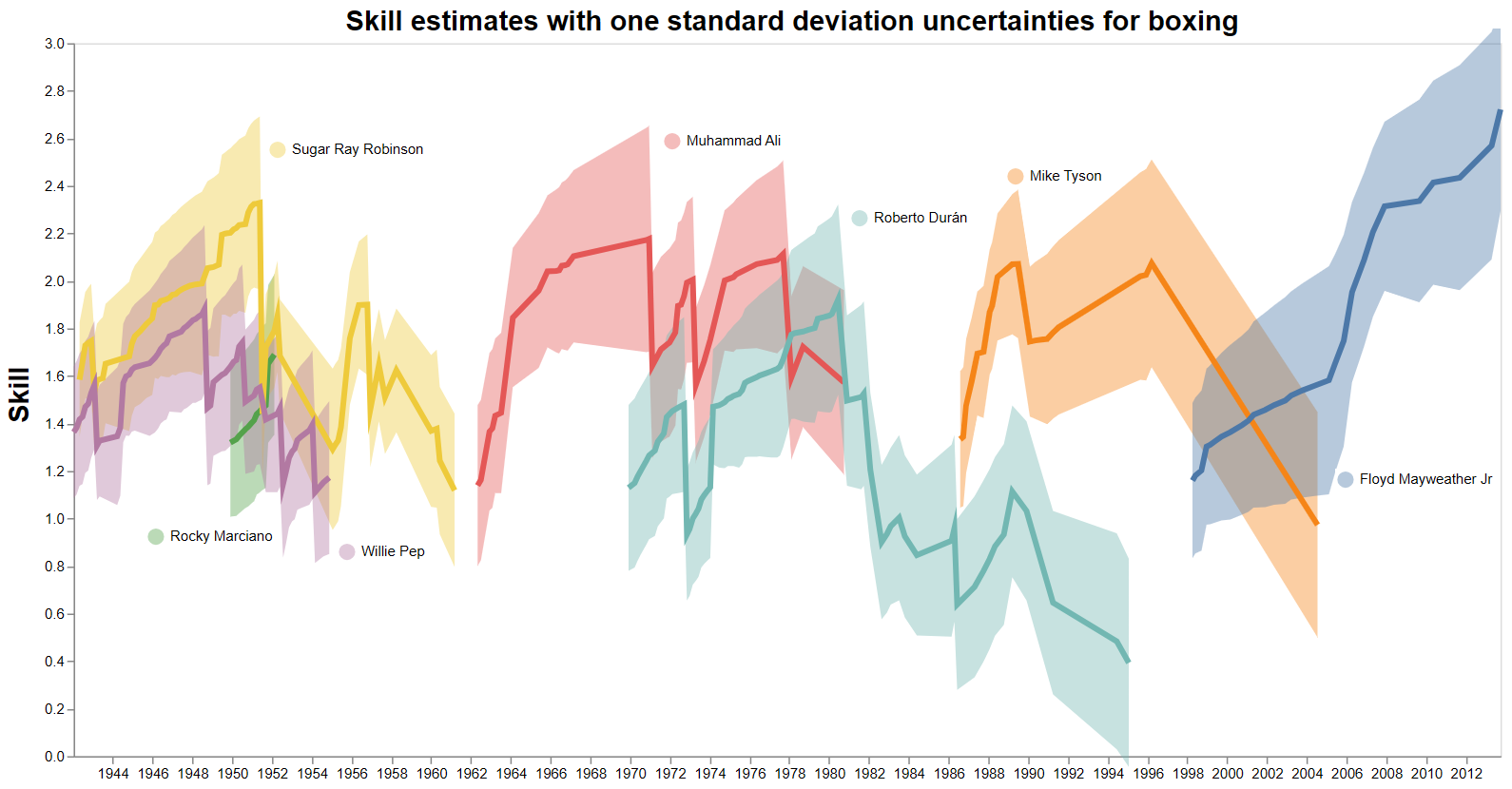

Boxing was the original motivation for the analysis, since ‘Who is the greatest boxer of all time?’ is a fiercely debated question. Rather disappointingly, the data is not as friendly. Using this notebook, we get the following skill estimates.

Skill estimates of some top boxers.


The uncertainty bands are significantly wider than in the previous cases, and the model linearly interpolates skills over long periods without matches. Both follow from the sparsity of the data: boxing matches are much rarer than tennis or Warcraft 3 matches. Over a career, a boxer typically has a few dozen fights, whereas some tennis players play thousands of matches.

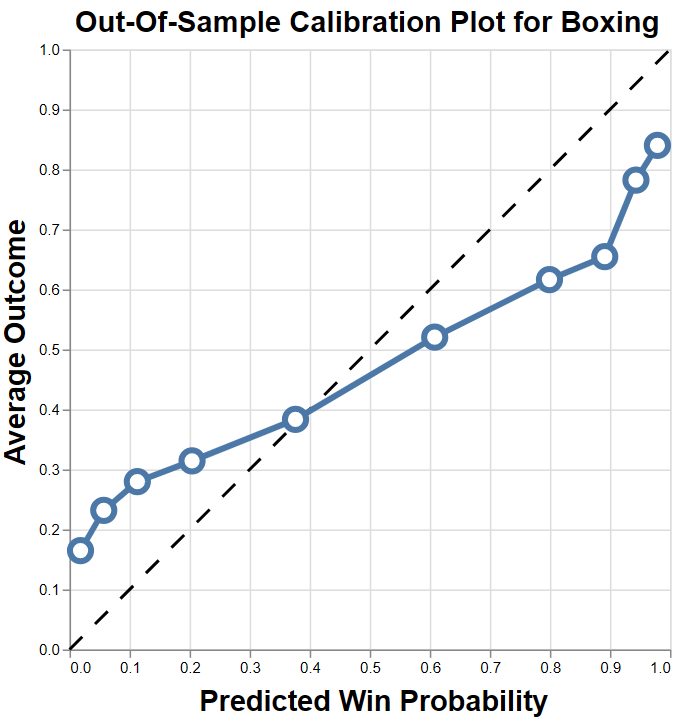

As a result of this sparsity, the model is not especially well calibrated.

The TrueSkill model for boxing is not well calibrated, but there is a strong correlation between predicted and actual win probabilities.


Despite this, the model seems to rank skill well: according to the calibration plot, higher predicted win probabilities correspond to higher actual test-set win rates. For this reason, we can have some confidence in the GOAT answer:

Due to his undefeated record, including fights with top boxers, Floyd Mayweather Jr. is the greatest of all time.
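The claim that the boxing model ranks skill well despite poor calibration can be checked with a metric that ignores calibration entirely. One common choice, swapped in here and not part of the original analysis, is the area under the ROC curve (AUC), which depends only on the ordering of predictions, so any order-preserving distortion of the probabilities leaves it unchanged. The toy data below is made up for illustration.

```python
from typing import Sequence


def auc(probs: Sequence[float], outcomes: Sequence[int]) -> float:
    """Area under the ROC curve: the chance that a randomly chosen win was given
    a higher predicted probability than a randomly chosen loss (ties count half)."""
    wins = [p for p, y in zip(probs, outcomes) if y == 1]
    losses = [p for p, y in zip(probs, outcomes) if y == 0]
    if not wins or not losses:
        raise ValueError("need at least one win and one loss")
    score = 0.0
    for p_win in wins:
        for p_loss in losses:
            if p_win > p_loss:
                score += 1.0
            elif p_win == p_loss:
                score += 0.5
    return score / (len(wins) * len(losses))


# Squashing predictions toward 50% ruins calibration but keeps their order.
probs = [0.95, 0.80, 0.60, 0.30, 0.10]
outcomes = [1, 1, 0, 1, 0]
squashed = [0.5 + 0.2 * (p - 0.5) for p in probs]
print(auc(probs, outcomes), auc(squashed, outcomes))
```

Both calls return the same value, which is why a model can be poorly calibrated yet still useful for ordering fighters by skill.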

However, the application to boxing differs from tennis and Warcraft 3 in notable ways. First, boxing has weight classes, so every match is effectively controlled for weight. The model therefore says nothing about fights across large weight gaps: a prime Floyd Mayweather Jr. would be unlikely to beat heavier boxers in their prime. So 'greatest of all time' here really means pound-for-pound greatest.

Further, weight classes strongly separate the data. They form groups within which matches are frequent and between which matches are rare. With few cross-class bouts to link them, the skill scales of different weight classes are only weakly anchored to each other, a second reason why boxers from different weight classes are hard to compare.
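The separation effect can be illustrated with a small match graph: fighters are nodes, bouts are edges, and two fighters' skills can only be compared through chains of bouts linking them. With no cross-class bouts, each class is a separate connected component and the data says nothing about how their skill scales line up; a single cross-class bout connects them, but then that one bout carries the entire comparison. The fighter names, weight-class labels, and helper functions below are hypothetical.

```python
from collections import defaultdict, deque
from typing import Dict, Iterable, List, Set, Tuple


def connected_components(matches: Iterable[Tuple[str, str]]) -> List[Set[str]]:
    """Group fighters who are linked by any chain of bouts."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for a, b in matches:
        graph[a].add(b)
        graph[b].add(a)
    seen: Set[str] = set()
    components: List[Set[str]] = []
    for start in graph:
        if start in seen:
            continue
        seen.add(start)
        component, queue = set(), deque([start])
        while queue:  # breadth-first search from `start`
            node = queue.popleft()
            component.add(node)
            for neighbor in graph[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    queue.append(neighbor)
        components.append(component)
    return components


def cross_class_share(matches: Iterable[Tuple[str, str]],
                      weight_class: Dict[str, str]) -> float:
    """Fraction of bouts between fighters in different weight classes."""
    matches = list(matches)
    cross = sum(weight_class[a] != weight_class[b] for a, b in matches)
    return cross / len(matches)


# Hypothetical fighters in two weight classes.
weight_class = {"L1": "light", "L2": "light", "L3": "light", "H1": "heavy", "H2": "heavy"}
bouts = [("L1", "L2"), ("L2", "L3"), ("H1", "H2")]
print(len(connected_components(bouts)))  # two separate groups
bouts.append(("L3", "H1"))  # a single cross-class bout
print(len(connected_components(bouts)), cross_class_share(bouts, weight_class))
```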

In Closing

As we've seen, the TrueSkill Through Time algorithm, along with its variants, really does allow us to tackle one of the most contentious debates in sports: Who is the GOAT? The calibration plots bolster our confidence: the model predicts held-out tennis and Warcraft 3 matches well and, even in boxing, orders outcomes sensibly. Its conclusions also align with what seasoned observers of these sports often say. Whether it's identifying Djokovic's remarkable peak in tennis, Happy's dominance in Warcraft 3, or Mayweather's supremacy in (pound-for-pound) boxing, the results check out. Other domains, like chess, can be analyzed similarly. After all, wherever structured match data exists, so does the opportunity to learn about the greatest of all time.

Lastly, I'd like to acknowledge that skill estimation runs deep. In state-of-the-art applications, far more contextual data can be incorporated than presented here, and algorithms can be more finely tuned and evaluated. For those interested in finding the very best algorithm for esports skill estimation in particular, I recommend exploring the riix package, which is designed to evaluate and compare such algorithms.

References

- P. Dangauthier, R. Herbrich, T. Minka, T. Graepel. TrueSkill Through Time: Revisiting the History of Chess. In Advances in Neural Information Processing Systems. MIT Press, January 2008.

Footnotes

- ¹ It should be noted that a good-looking calibration plot is not a complete evaluation of the model. What it omits is how often the model makes each kind of prediction. A perfect model would predict only 0% and 100% and never be wrong. A poor but well-calibrated model could predict 50% almost all of the time: in any two-player game without draws, a randomly chosen player wins half the time, so always predicting 50% is calibrated yet uninformative.
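This point can be quantified with a proper scoring rule. One option, swapped in here rather than taken from the post, is the Brier score: the mean squared error between predicted probabilities and 0/1 outcomes, which rewards predictions that are both calibrated and sharp. In the made-up example below, both forecasters are perfectly calibrated, but the constant 50% forecaster scores 0.25 while the sharper one scores lower. The `sharpness` helper is a hypothetical illustration of 'how far from 50% the model is willing to go'.

```python
from typing import Sequence


def brier_score(probs: Sequence[float], outcomes: Sequence[int]) -> float:
    """Mean squared difference between predicted win probability and the
    0/1 outcome (lower is better)."""
    return sum((p - y) ** 2 for p, y in zip(probs, outcomes)) / len(probs)


def sharpness(probs: Sequence[float]) -> float:
    """Mean distance of predictions from 50%; 0 for a model that always says 50%."""
    return sum(abs(p - 0.5) for p in probs) / len(probs)


outcomes = [1, 0, 1, 0, 1, 0, 1, 0, 1, 0]
coin_flip = [0.5] * len(outcomes)  # calibrated but uninformative
# Also calibrated: 4 of the 5 "0.8" predictions win, 1 of the 5 "0.2" predictions wins.
informed = [0.8, 0.2, 0.8, 0.2, 0.8, 0.2, 0.8, 0.2, 0.2, 0.8]

for name, probs in [("coin flip", coin_flip), ("informed", informed)]:
    print(f"{name}: Brier {brier_score(probs, outcomes):.2f}, "
          f"sharpness {sharpness(probs):.2f}")
```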