BentoML

Posted: Updated:

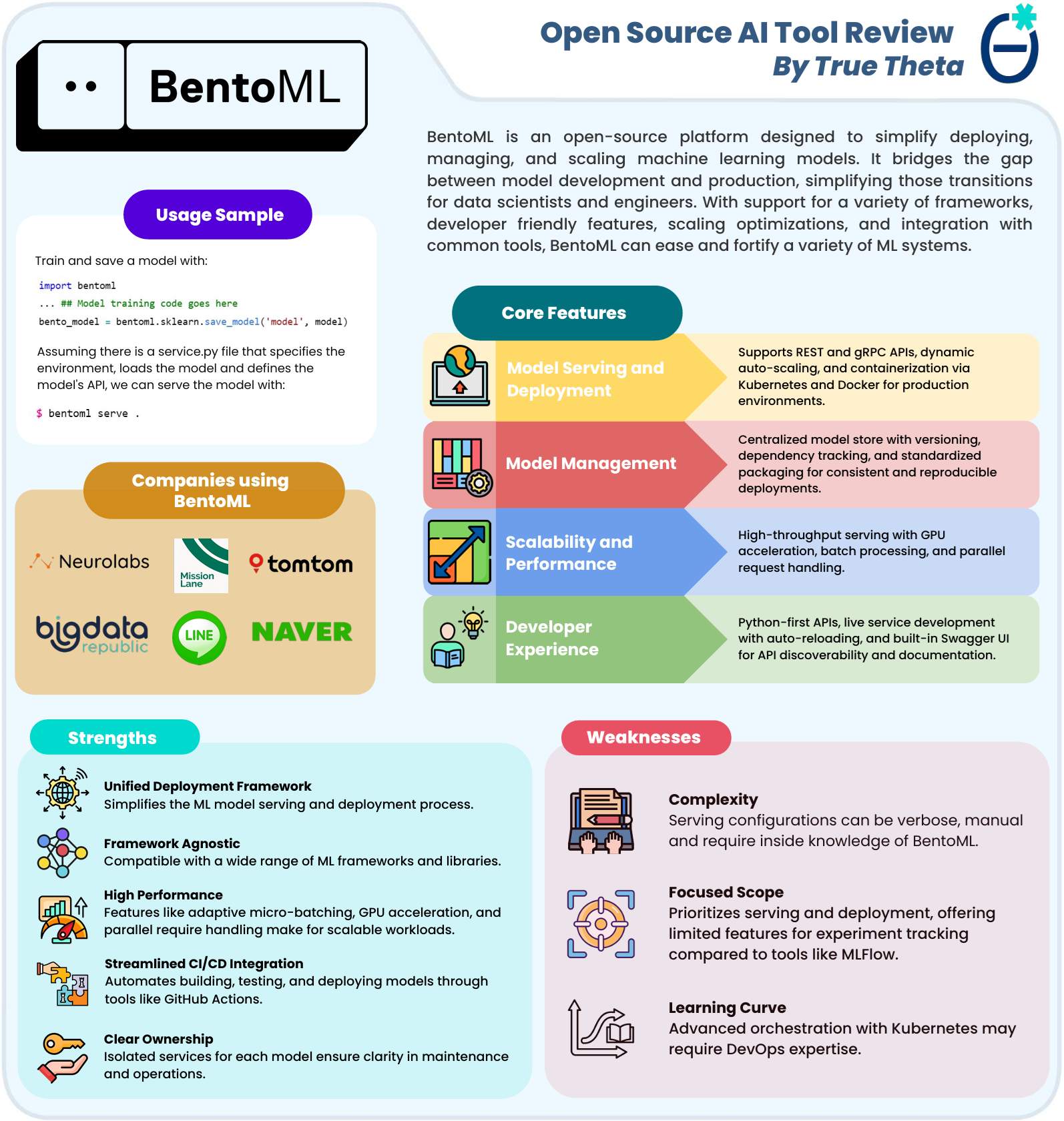

In this article, we’ll review BentoML, an open-source platform designed to simplify deploying, managing, and scaling machine learning models. At the end, we’ll provide a one-page PDF summarizing our take.

BentoML Serving and Deployment

BentoML addresses a common challenge for data scientists and engineers: the gap between model development and production deployment. It is a persistent source of breakages and frustration. Large companies invest considerable time and money into in-house solutions to minimize this misalignment. BentoML offers an open-source alternative, well tested and adopted by companies. As an example, we find Mission Lane’s BentoML blog posts to be an illustrative how-to case study.

To do this, BentoML shortens the distance between a data scientist’s model training code and a served model. As a brief illustration, we could have a save_model.py file that performs:

import bentoml

... ## Model training code goes here

bento_model = bentoml.sklearn.save_model('model', model)

Assuming there is a service.py file that specifies the environment, loads the model, and defines the model’s API, we can run the following in the same directory to serve the model:

$ bentoml serve .

The model would now be available via an API. BentoML is well tested in this operation, largely eliminating a common source of breakages. For more details, see here.

Model Management

BentoML provides a centralized model store with versioning, dependency tracking, and standardized packaging. This makes it easier to catalog models, handle their dependencies, and track their status. This is especially helpful since dependency variations are a common source of production breakages.

Scalability and Performance

For production models, BentoML offers high-throughput serving with several performance optimizations:

- Adaptive micro-batching dynamically adjusts batch size and batching intervals based on real-time request patterns. This helps minimize latency during periods of low traffic and increases throughput under higher loads, optimizing resource usage without manual tuning.

- GPU acceleration allows BentoML services to leverage GPUs for faster model inference. BentoML supports assigning specific GPUs or multiple GPUs per service, enabling efficient resource utilization and reduced latency. This is particularly beneficial for compute-intensive models, like LLMs or computer vision models.

- Parallelized request handling enables BentoML services to process requests concurrently by running multiple worker processes. This improves utilization of multi-core CPUs and GPUs by balancing throughput and resource usage according to workload requirements.

Developer Experience

BentoML prioritizes the developer experience with:

- Python-first APIs that feel natural to data scientists.

- Live service development with auto-reloading for quick iterations.

- A built-in Swagger UI for visualizing, documenting, and interacting with RESTful APIs. This is auto-generated and allows developers to explore API endpoints, test requests, and view responses in the browser.

Strengths

From our experience and surveying GitHub issues, user feedback, and industry adoption patterns, we believe BentoML’s primary strengths are:

- Unified Deployment Framework: BentoML simplifies the ML model serving and deployment process, providing a consistent workflow, reasonably independent of the underlying ML framework.

- Framework Agnostic: The platform is compatible with a wide range of ML frameworks and libraries, including scikit-learn, PyTorch, TensorFlow, Hugging Face, and others. This flexibility allows teams to use their preferred tools while maintaining a standardized deployment approach.

- High Performance: Features like adaptive micro-batching, GPU acceleration, and parallel evaluation allow served models to manage high-volume workloads.

- Streamlined CI/CD Integration: BentoML integrates well with modern development workflows, allowing for automated building, testing, and deploying of models through tools like GitHub Actions.

- Clear Ownership: The service-based architecture creates clear boundaries of responsibility, with isolated services for each model ensuring clarity in maintenance and operations.

Weaknesses

We’ve also identified some challenges users should consider:

- Complexity: Writing serving configs can be verbose, manual, and require knowledge of BentoML’s internals. Further, writing custom, non-standard models can require extensive additional code.

- Focused Scope: BentoML prioritizes serving and deployment, offering limited features for experiment tracking compared to tools like MLflow. Organizations looking for an end-to-end ML platform might need to combine BentoML with other tools for comprehensive coverage of the ML lifecycle, especially for observability.

- Learning Curve: While BentoML simplifies many aspects of model deployment, advanced orchestration with Kubernetes may still require DevOps expertise. Organizations without this expertise might face implementation challenges.

Summary

To make this discussion portable, we’ve packaged the main points into a downloadable PDF: